Google has just taken its first steps towards transparency around AI-generated images by announcing SynthID, a watermarking tool for generative art.

The announcement came today from Google DeepMind, which says the new technology will embed a digital watermark that’s ‘invisible to the human eye’ directly into the pixels of an image.

As it stands, SynthID will first be rolled out to a ‘limited number’ of customers who are currently using Imagen, Google’s very own AI art generator, available as part of an array of AI tools offered by the company.

As an artist myself, my first reaction is to welcome this step, as the ethical implications of stealing artists’ work are a big deal. However, there’s also the issue of deepfakes, a phenomenon that’s becoming much more commonplace online.

And instead of putting the Pope in funky new hip-hop gear, or Steve Buscemi in a dress at the Golden Globes, deepfakes could be used to inflict a lot more damage – especially when it comes to politically motivated content.

That’s why seven AI companies committed earlier in the year, after a meeting at the White House, to “watermarking audio and visual content to help make it clear that content is AI-generated”.

However, Google, being the trailblazer it is, is the first to actually put something like this in place.

So, how does it work?

There’s not a great deal of information about the technical workings of SynthID (probably for security purposes), but Google has said the watermark cannot be easily removed via standard editing techniques.

Here’s what SynthID project leaders Sven Gowal and Pushmeet Kohli wrote in today’s DeepMind blog post on the subject:

Finding the right balance between imperceptibility and robustness to image manipulations is difficult. Highly visible watermarks, often added as a layer with a name or logo across the top of an image, also present aesthetic challenges for creative or commercial purposes. Likewise, some previously developed imperceptible watermarks can be lost through simple editing techniques like resizing.

We designed SynthID so it doesn’t compromise image quality, and allows the watermark to remain detectable, even after modifications like adding filters, changing colours, and saving with various lossy compression schemes — most commonly used for JPEGs.
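To see why that balance is hard, here’s a toy sketch in Python of the fragile kind of invisible watermark the quote alludes to. To be clear, this is not how SynthID works (Google hasn’t published those details). It’s a classic least-significant-bit scheme, picked purely as an assumption for illustration, with made-up helper names like `embed_lsb` and `resize_round_trip`, and it runs on random noise rather than a real picture.

```python
import random

def embed_lsb(pixels, bits):
    """Overwrite the least significant bit of each pixel with a watermark bit."""
    return [[(p & ~1) | b for p, b in zip(row, bit_row)]
            for row, bit_row in zip(pixels, bits)]

def match_rate(pixels, bits):
    """Fraction of pixels whose least significant bit equals the watermark bit."""
    total = sum(len(row) for row in pixels)
    hits = sum((p & 1) == b
               for row, bit_row in zip(pixels, bits)
               for p, b in zip(row, bit_row))
    return hits / total

def resize_round_trip(pixels):
    """Halve the image by averaging 2x2 blocks, then enlarge it by repetition."""
    h, w = len(pixels), len(pixels[0])
    small = [[(pixels[y][x] + pixels[y][x + 1] +
               pixels[y + 1][x] + pixels[y + 1][x + 1]) // 4
              for x in range(0, w, 2)]
             for y in range(0, h, 2)]
    return [[small[y // 2][x // 2] for x in range(w)] for y in range(h)]

# Hypothetical test data: a 64x64 greyscale "image" of random noise
# and a random one-bit-per-pixel watermark.
rng = random.Random(0)
size = 64
image = [[rng.randrange(256) for _ in range(size)] for _ in range(size)]
watermark = [[rng.randrange(2) for _ in range(size)] for _ in range(size)]

marked = embed_lsb(image, watermark)
resized = resize_round_trip(marked)

# Largest change the watermark makes to any single pixel value.
max_change = max(abs(a - b)
                 for row_a, row_b in zip(image, marked)
                 for a, b in zip(row_a, row_b))
rate_before = match_rate(marked, watermark)
rate_after = match_rate(resized, watermark)

print("largest pixel change:", max_change)
print("match rate on untouched copy:", rate_before)
print("match rate after resizing:", round(rate_after, 2))
```

The watermark shifts each pixel by at most one brightness level, so it’s invisible, and it reads back perfectly from the untouched copy. After a single shrink-and-enlarge, though, the match rate drops to around a half, which is no better than guessing.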

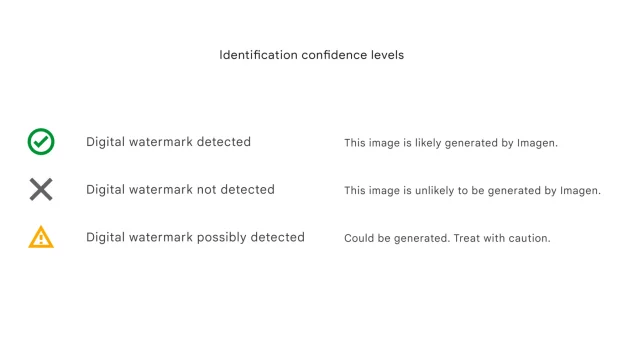

SynthID is made up of two deep learning models working in tandem: one for watermarking, the other for identifying. These were trained together on a diverse set of images, according to the company.

According to Gowal and Kohli:

The combined model is optimised on a range of objectives, including correctly identifying watermarked content and improving imperceptibility by visually aligning the watermark to the original content.

But don’t get too excited. Google admits this is not a perfect solution, adding that it “isn’t foolproof against extreme image manipulations”. Still, it does describe SynthID as “a promising technical approach for empowering people and organisations to work with AI-generated content responsibly”.

The tool could, apparently, be expanded to other AI models such as ChatGPT. Which is cool, I guess.
